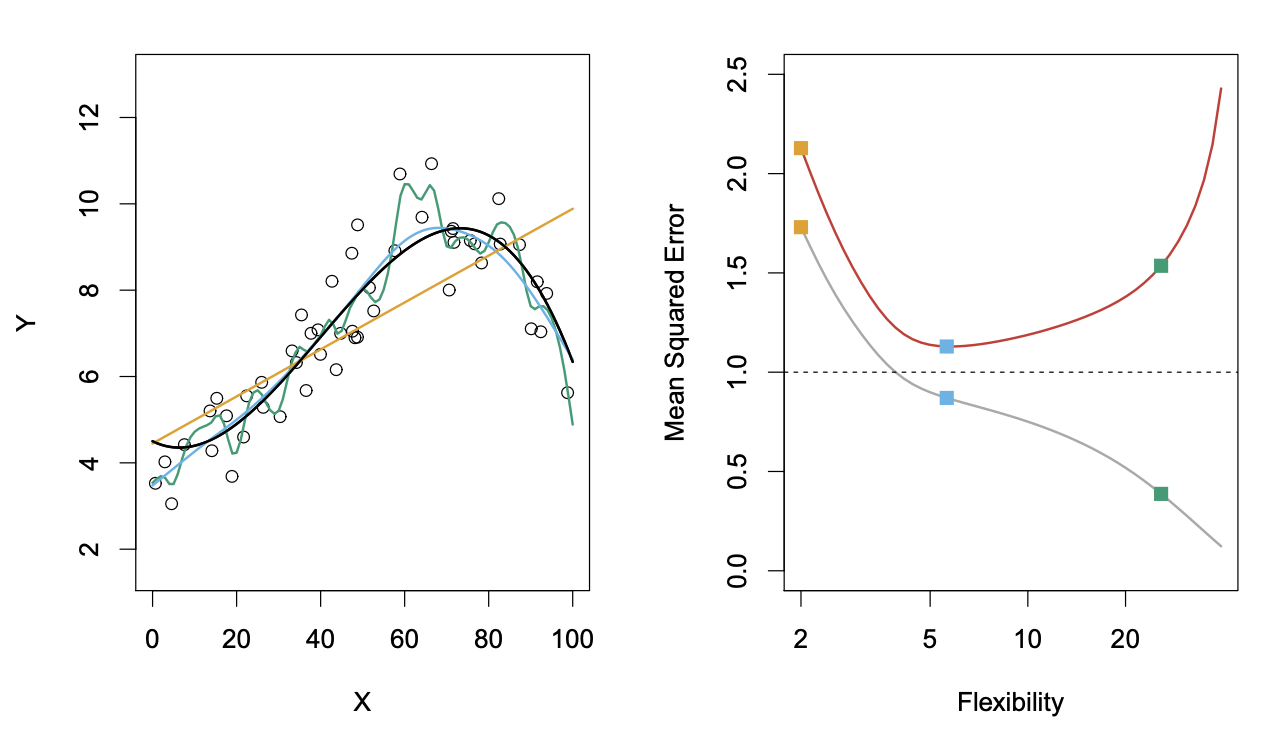

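First, we should answer the question: how do we know whether a model performs well? One way is to compute the Mean Squared Error (MSE):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \hat{f}(x_i)\bigr)^2$$

where $\hat{f}(x_i)$ is the model's prediction for the $i$-th observation.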

The important point is that we compute the MSE on test data that was not used to train the model. (1) Why? If we don't have test data, one might imagine choosing the model that minimizes the MSE on the training data. (2) What problem do we face then? (3) Recall the general pattern (graph) relating MSE and flexibility. Hint: just explain the U-shaped graph.
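The pattern in the hint can be reproduced with a small simulation. This is a minimal sketch under stated assumptions, not part of the original notes: the "true" function `true_f`, the noise level, the sample sizes, and the use of polynomial degree as a stand-in for flexibility are all illustrative choices.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def true_f(x):
    # Hypothetical "true" function, chosen only for illustration.
    return np.sin(2 * np.pi * x)

def simulate(n, noise_sd=0.3):
    # Draw n observations y = f(x) + noise (assumed data-generating process).
    x = rng.uniform(0.0, 1.0, size=n)
    y = true_f(x) + rng.normal(0.0, noise_sd, size=n)
    return x, y

def mse(y, y_hat):
    # Mean squared error between observed and predicted values.
    return float(np.mean((y - y_hat) ** 2))

x_train, y_train = simulate(30)    # small training set
x_test, y_test = simulate(1000)    # held-out data, never used for fitting

degrees = list(range(1, 11))       # polynomial degree plays the role of flexibility
train_mse, test_mse = [], []
for d in degrees:
    model = Polynomial.fit(x_train, y_train, deg=d)  # least-squares polynomial fit
    train_mse.append(mse(y_train, model(x_train)))
    test_mse.append(mse(y_test, model(x_test)))

for d, tr, te in zip(degrees, train_mse, test_mse):
    print(f"degree {d:2d}: train MSE = {tr:.3f}, test MSE = {te:.3f}")
```

The training MSE can only go down as the degree grows, because each higher-degree polynomial contains the lower-degree ones as special cases. The test MSE has no such guarantee, which is why it is the quantity to compare across models.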

Bias-Variance Trade-off

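The expected test MSE at a point $x_0$ can be decomposed (no proof) into three fundamental quantities: the variance of $\hat{f}(x_0)$, the squared bias of $\hat{f}(x_0)$, and the variance of the error term $\varepsilon$:

$$E\bigl[(y_0 - \hat{f}(x_0))^2\bigr] = \mathrm{Var}\bigl(\hat{f}(x_0)\bigr) + \bigl[\mathrm{Bias}\bigl(\hat{f}(x_0)\bigr)\bigr]^2 + \mathrm{Var}(\varepsilon)$$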

The last term is inherently random, and we can't really get rid of it. The first two terms can be reduced, but doing so is tricky. (4) Intuitively, what are the variance and bias of $\hat{f}$? (5) What's the connection between them and flexibility? Alternatively, just explain what's happening in the graph: black is the true $f$, orange is linear regression.

Answers

- Variance refers to how much the predicted value $\hat{f}$ would change if we used a different data set to train it. Bias refers to the error introduced by the approximation/simplification that a model makes.

- A more flexible method has low bias but high variance. A less flexible method has low variance but high bias.
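The two answers above can be checked numerically by refitting a model on many simulated training sets and looking at its predictions at a single point. This is a rough sketch, not a definitive implementation: the function `true_f`, the point `x0`, the noise level, and the helper `bias_variance_at` are hypothetical, and the inputs are kept on a fixed grid so that only the noise changes between training sets (a simplifying assumption).

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(1)

def true_f(x):
    # Hypothetical "true" function, chosen only for illustration.
    return np.sin(2 * np.pi * x)

def bias_variance_at(x0, degree, n_train=30, n_repeats=500, noise_sd=0.3):
    # Estimate squared bias and variance of the prediction at x0 by
    # refitting the same kind of model on many simulated training sets.
    x = np.linspace(0.0, 1.0, n_train)   # fixed inputs (simplifying assumption)
    preds = np.empty(n_repeats)
    for r in range(n_repeats):
        y = true_f(x) + rng.normal(0.0, noise_sd, size=n_train)  # fresh noise
        preds[r] = Polynomial.fit(x, y, deg=degree)(x0)
    bias_sq = (preds.mean() - true_f(x0)) ** 2   # systematic error, squared
    variance = preds.var()                       # spread across training sets
    return float(bias_sq), float(variance)

x0 = 0.25
bias_sq_lin, var_lin = bias_variance_at(x0, degree=1)     # inflexible: a straight line
bias_sq_flex, var_flex = bias_variance_at(x0, degree=9)   # flexible: degree-9 polynomial

print(f"linear:   bias^2 = {bias_sq_lin:.4f}, variance = {var_lin:.4f}")
print(f"flexible: bias^2 = {bias_sq_flex:.4f}, variance = {var_flex:.4f}")
```

In this setup the straight line should show the larger squared bias and the degree-9 polynomial the larger variance, matching the trade-off described in the answers.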